CS: APP--Chapter05: optimizing program performance (part 1)

- CS: APP--Chapter05: optimizing program performance (part 1)

- prologue

- 1. capabilities and limitations of optimizing compiler

- 1.1 memory aliasing

- 1.2 function calls

- 2. expressing program performance

- 2.1 cycle per element versus pico&nano-second

- 2.2 CPE versus CPS

- 3. program example

- 4. eliminating loop inefficiencies

- 5. reducing procedure calls

- solution for case 1

- solution for case 2

- 6. eliminating unneeded memory references

- 7. understanding modern processors

- 7.1 overall operation

prologue

The primitive object of a programmer is to make it run correctly even fast. Not only do we ensure others can make sense of it, but also others can understand the code during review and when some modifications are needed.

Several types of activities for optimizing the program are necessary :

- select an appropriate set of data structures and algorithm

- write code in a way that we assist the compiler to turn source code into an efficient executable code

- parallelism ( detail in chapter 12 )

This chapter focus on one ultimate goal - how to make code run correctly and faster via several different types of program optimization. Eliminating the unnecessary work that has nothing to do with the processor and executing the divided work in parallel that highly depends on the architecture of the processor make our code run faster. For increasing the degree of parallelism with which we execute our code, more information will be discussed in chapter 12.

And a linear process of applying a series of transformations to code in a particular order is my learning objective.

A good way of gaining a better understanding of optimization is by looking into the assembly language with the optimization level Og and O1 and even higher.

1. capabilities and limitations of optimizing compiler

Here, a compiler with a growing ability of optimization would not optimize code in an aggressive and radical way due to its constraints. The compiler must adopt a safe optimization.

1.1 memory aliasing

In many cases, the probability of referring to an identical memory makes the compiler runs with a safe optimization.

An example is proposed on page 535 that adding two numbers twice is for a factor of 4 to one variable, which runs the same as 4 times with fewer operations at the assembly-language level. But the compiler cannot optimize the first case directly to the second case, because the compiler doesn't ensure whether or not they refer to the identical memory leading to the erroneous result.

1.2 function calls

another optimization block here is optimizing multiple calls of one function to fewer times of calls for the function may leading to side effects here. If there is a global variable, it must be modified several times to change program behavior named by side effect.

In this case, compile assumes the worst case and just leaves the function calls intact(unchanged).

Even though many types of optimization exploit the program to their full extent, the constraints of compilers limit the set of optimization.

Inline substitute (inlining)

Substituting function calls by the body of the function can improve the performance when the function is simple. It may bring on the problem as the code is being debugged because the trace for the function will be lost as well as profiling when the function with inline substitution will be profiled incorrectly.

2. expressing program performance

Like the metric throughout and latency in the last chapter, a metric "cycles per element" is introduced here, abbreviated by CPM and Especially for loop behavior.

2.1 cycle per element versus pico&nano-second

The time required by the procedure is characterized as a constant plus a factor proportional to the number of elements processed.

2.2 CPE versus CPS

In comparison with the metric "cycles per iteration", CPE focus on the performance of the whole procedure rather than the repeated parts.

-> least squares fit to find the expression for example 60+35n

3. program example

My understanding of optimizing programs:

- identify where the inefficiencies are

- a series of transformations implemented in a particular order

prerequisite:[malloc() && calloc()4

An example of vector abstract data type is demonstrated :

To gauge the performance, CPE is used to reveal how long each procedure takes in terms of data types and operation. One point emphasized at the beginning of this chapter is that selecting an appropriate data type and operation such as shifting a combination of arithmetic operations to shift operations can make a big difference in program performance.

One way of handing control on to the programmer is specifying the optimization level from Og with the basic optimization to O2 or even higher. A big leap from O0 to O1 as the option of optimization level.

4. eliminating loop inefficiencies

Observe the test condition of the for loop comprising of the call of function vec_length, every time the test condition is executed, the call of vec_length gets executed. And the value of vec_length does not change as the loop proceeds.

| step | description |

|---|---|

| 1 | long length = vec_length(*vect_ptr); |

| 2 | i < length |

In this case, the computation for some variables is moved to an earlier section of the code that does not get evaluated as often. This kind of optimization is known as code motion.

Note: As a result of the limitation of a compiler for safe optimization, the compiler won't perform in an aggressive way. Then we need to assist the compiler to turn source code into effective machine code. On the other hand, some types of ambiguities can be removed by writing code in a smart way.

| inefficiencies | limitation of compiler | our implements |

|---|---|---|

| call function as the loop proceeds | compiler cannot determine whether or not this call has side effect. | code motion ? loop unrolling? |

5. reducing procedure calls

the sources of inefficiency are listed below based on combine1():

- every time vec_length() of test condition of for loop is called as for loop proceeds.

- retrieving the element in data array involves the boundart checking every time

solution for case 1

code motion for length moved to an early block of code that is not evaluated as often.

solution for case 2

bounds checking seems unnecessary in this case because all possible elements in the test condition are valid.

The result turns out to be different than we have thought, both implements have almost the same performance indeed. But at the algorithmic level, the latter case should perform better than the original one.

6. eliminating unneeded memory references

Up to this point, more understanding for assembly language is demanded. For combine3() over here, the value designated by pointer desk is read after written into at the end of last iteration. This reading and writing is so wasteful because both values are definitely equal.

within loop block:

Thanks to the property of movement operation with not only computing effective address but also perform arthimetic operation. Besides, the introduction of one local variable just accelebrate the whole process.

The demostration above focus on the optimization of program behavior, but the succeeding section will focus on processor architecture to find more for further improvement.

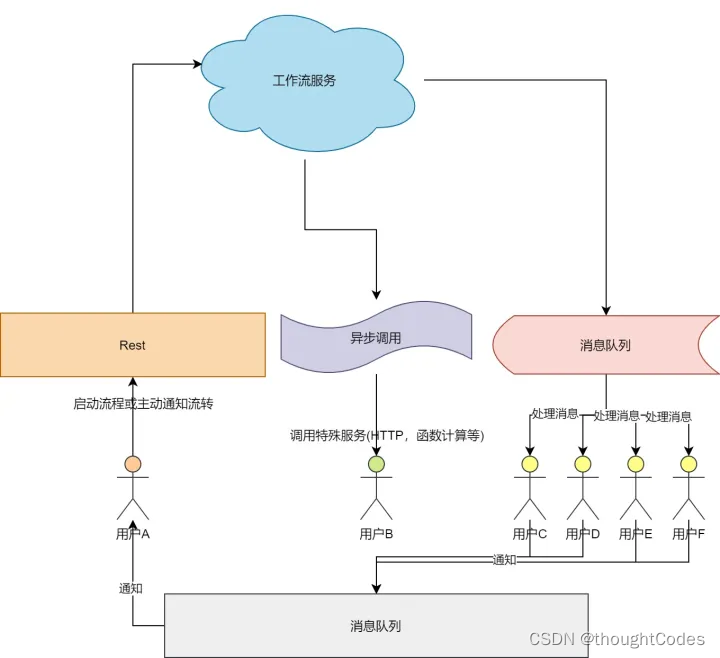

7. understanding modern processors

Unlike the optimization over program itself, seeking the optimization by exploiting the processor to its full extents needs to understand the processot architecture.

As same as the difference between high-level program and assembly program, the processing of what source code want to achieve orginally is far different from what processor actually implement at machieve level.parallelism provides a special view to run several instructions simultaneously rather than a uniform series of stages from the updating pc to write back.

After it, two different lower bounds characterize the maximum performance of a program.

- latency bounds :

- throughput bounds :

From my own perspective, it is equivalent to the concept: barrier.

7.1 overall operation

Modern processors more complicated than the pipeline processor in chapter 4 is described as superscalar because they can execute loans of instruction on every clock cycle and particularly out of order( different from the order of the machine-level program. )