by Kirill Dubovikov

Machine Learning as a Service with TensorFlow

Imagine this: you’ve jumped aboard the AI Hype Train and decided to develop an app that will analyze the effectiveness of different chopstick types. To monetize this mind-blowing AI application and impress VCs, we’ll need to open it to the world. And it had better be scalable, as everyone will want to use it.

As a starting point, we will use this dataset, which contains measurements of the food-pinching efficiency of various individuals using chopsticks of different lengths.

Architecture

As we are not only data scientists but also responsible software engineers, we’ll first draft out our architecture. First, we’ll need to decide on how we will access our deployed model to make predictions. For TensorFlow, a naive choice would be to use TensorFlow Serving. This framework allows you to deploy trained TensorFlow models, supports model versioning, and uses gRPC under the hood.

The main caveat about gRPC is that it’s not very public-friendly compared to, for example, REST services. Anyone with minimal tooling can call a REST service and quickly get a result back. But when you are using gRPC, you must first generate client code from proto files using special utilities and then write the client in your favorite programming language.

TensorFlow Serving simplifies a lot of things in this pipeline, but it’s still not the easiest framework for consuming an API on the client side. Consider TF Serving if you need a lightning-fast, reliable, strictly typed API that will be used inside your application (for example, as a backend service for a web or mobile app).

We will also need to satisfy non-functional requirements for our system. If lots of users might want to know their chopstick effectiveness level, we’ll need the system to be fault tolerant and scalable. Also, the UI team will need to deploy their chopstick’o’meter web app somewhere too. And we’ll need resources to prototype new machine learning models, possibly in a Jupyter Lab with lots of computing power behind it. One of the best answers to those questions is to use Kubernetes.

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

With Kubernetes in place, given the necessary knowledge and some time, we can create a scalable in-house cloud PaaS solution that provides infrastructure and software for full-cycle data science project development. If you are unfamiliar with Kubernetes, I suggest watching an introductory talk on it first.

Kubernetes runs containerized applications, and in this post those containers are built with Docker, so if you are unfamiliar with Docker, it may be good to read the official Docker tutorial first.

All in all, this is a very rich topic that deserves several books to cover completely, so here we will focus on a single part: moving machine learning models to production.

Considerations

Yes, this dataset is small. And yes, applying deep learning might not be the best idea here. Just keep in mind that we are here to learn, and this dataset is certainly fun. The modeling part of this tutorial will be lacking in quality, as the main focus is on the model deployment process.

Also, we need to impress our VCs, so deep learning is a must! :)

Code

All code and configuration files used in this post are available in a companion GitHub repository.

Training the Deep Chopstick classifier

First, we must choose a machine learning framework to use. As this article is intended to demonstrate TensorFlow serving capabilities, we’ll choose TensorFlow.

As you may know, there are two ways we can train our classifier: using plain TensorFlow and using the TensorFlow Estimator API. The Estimator API is an attempt to present a unified interface for deep learning models, much as scikit-learn does for a set of classical ML models. For this task, we can use tf.estimator.LinearClassifier to quickly implement logistic regression and export the model after training has completed.

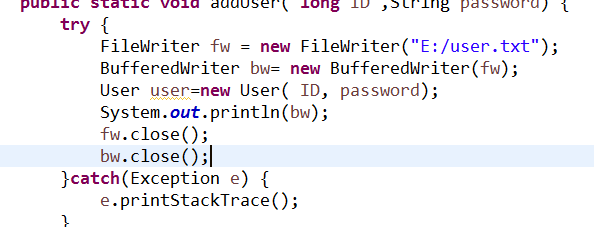

The other way is to use plain TensorFlow to train and export a classifier; the training code for both approaches is available in the companion repository.

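Whichever route you take, the model fitted here is a linear classifier whose per-class scores go through a softmax. In other words, it is multinomial logistic regression, which is what tf.estimator.LinearClassifier trains when n_classes is greater than 2.

To show what that training boils down to, here is a dependency-free sketch. It assumes plain batch gradient descent on the cross-entropy loss; TensorFlow's own optimizers differ. It also uses a made-up one-feature, three-class toy dataset, not the chopstick data or the repository's code.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights: List[List[float]], biases: List[float],
                  x: Sequence[float]) -> List[float]:
    # One linear score per class (w_k . x + b_k), then a softmax over classes.
    logits = [sum(w * xi for w, xi in zip(w_k, x)) + b_k
              for w_k, b_k in zip(weights, biases)]
    return softmax(logits)


def train(xs: List[List[float]], ys: List[int], n_classes: int,
          lr: float = 0.1, epochs: int = 1000) -> Tuple[List[List[float]], List[float]]:
    n_features = len(xs[0])
    weights = [[0.0] * n_features for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(epochs):
        grad_w = [[0.0] * n_features for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        for x, y in zip(xs, ys):
            probs = predict_proba(weights, biases, x)
            for k in range(n_classes):
                # d(cross-entropy)/d(logit_k) = p_k - 1[y == k]
                err = probs[k] - (1.0 if y == k else 0.0)
                grad_b[k] += err
                for j in range(n_features):
                    grad_w[k][j] += err * x[j]
        # Average the gradient over the batch and take one descent step.
        n = len(xs)
        for k in range(n_classes):
            biases[k] -= lr * grad_b[k] / n
            for j in range(n_features):
                weights[k][j] -= lr * grad_w[k][j] / n
    return weights, biases


if __name__ == "__main__":
    # Hypothetical toy data: one scaled feature, three well-separated classes.
    xs = [[-2.0], [-1.8], [0.0], [0.1], [2.0], [1.9]]
    ys = [0, 0, 1, 1, 2, 2]
    w, b = train(xs, ys, n_classes=3)
    print(predict_proba(w, b, [2.0]))
```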

Setting up TensorFlow Serving

So, you have an awesome deep learning model with TensorFlow and are eager to put it into production? Now it’s time to get our hands on TensorFlow Serving.

TensorFlow Serving is based on gRPC, a fast remote procedure call library that uses another Google project, Protocol Buffers, under the hood.

Protocol Buffers is a serialization framework that allows you to transform objects from memory to an efficient binary format suitable for transmission over the network.

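To make "efficient binary format" concrete, here is a hand-rolled sketch of two pieces of the Protocol Buffers wire format.

- Integers are encoded as base-128 varints.
- Each field is introduced by a tag computed as (field_number << 3) | wire_type. Wire type 0 is used for varints and 2 for length-delimited bytes.

In real projects the protobuf library generates this code from .proto files. The helper names below are illustrative only.

```python
from typing import Tuple

WIRE_VARINT = 0  # wire type for varint-encoded integers
WIRE_LEN = 2     # wire type for length-delimited bytes/strings


def encode_varint(value: int) -> bytes:
    """Encode a non-negative int as a varint: 7 bits per byte, low bits first."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)         # high bit clear: last byte
            return bytes(out)


def decode_varint(data: bytes, pos: int = 0) -> Tuple[int, int]:
    """Decode a varint starting at pos; return (value, position after it)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def encode_int_field(field_number: int, value: int) -> bytes:
    return encode_varint((field_number << 3) | WIRE_VARINT) + encode_varint(value)


def encode_bytes_field(field_number: int, payload: bytes) -> bytes:
    return (encode_varint((field_number << 3) | WIRE_LEN)
            + encode_varint(len(payload)) + payload)


if __name__ == "__main__":
    print(encode_int_field(1, 150).hex())           # 089601
    print(encode_bytes_field(2, b"testing").hex())  # 120774657374696e67
```

Field 1 holding 150 costs three bytes on the wire, compared with nine for the JSON text {"a":150}. Field names are not sent at all: the schema in the .proto file supplies them.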

To recap, gRPC is a framework that enables remote function calls over the network. It uses Protocol Buffers to serialize and deserialize data.

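On the wire, gRPC sends each serialized protobuf message over an HTTP/2 stream with a small prefix: a 1-byte compressed flag followed by a 4-byte big-endian length. This is the "Length-Prefixed-Message" in the gRPC over HTTP/2 protocol description.

Below is a minimal standard-library sketch of that framing. It assumes uncompressed messages; real clients get this from the grpc package, and the function names are hypothetical.

```python
import struct
from typing import Iterator


def frame_message(message: bytes, compressed: bool = False) -> bytes:
    # 1-byte compressed flag + 4-byte big-endian unsigned length + payload.
    return struct.pack(">BI", 1 if compressed else 0, len(message)) + message


def iter_messages(stream: bytes) -> Iterator[bytes]:
    # Walk a buffer of back-to-back length-prefixed messages.
    pos = 0
    while pos < len(stream):
        flag, length = struct.unpack_from(">BI", stream, pos)
        if flag:
            raise NotImplementedError("compressed messages are out of scope here")
        pos += 5  # skip the 5-byte prefix
        yield stream[pos:pos + length]
        pos += length


if __name__ == "__main__":
    # b"\x08\x96\x01" is the protobuf encoding of field 1 = 150.
    payload = b"\x08\x96\x01"
    framed = frame_message(payload)
    print(framed.hex())                      # 0000000003089601
    print(list(iter_messages(framed * 2)))
```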

The main components of TensorFlow Serving are:

- Servable: basically a version of your trained model, exported in a format that TF Serving can load

- Loader: the TF Serving component that, by coincidence, loads servables into memory

- Manager: implements a servable’s lifecycle operations. It controls a servable’s birth (loading), long life (serving), and death (unloading)

- Core: makes all components work together (the official documentation is a little vague on what the core actually is, but you can always look at the source code to get the hang of what it does)

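To see how these roles fit together, here is a deliberately tiny Python model of the loader/manager relationship. It is not TF Serving's API; the real components are C++ classes inside TF Serving. Servable, Loader, Manager, and their methods are hypothetical names chosen to mirror the roles described above, and each "model" is just a function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Servable:
    name: str
    version: int
    predict: Callable[[float], float]


class Loader:
    """Knows how to bring one version of one servable into memory."""

    def __init__(self, name: str, version: int,
                 factory: Callable[[], Callable[[float], float]]) -> None:
        self.name, self.version, self.factory = name, version, factory

    def load(self) -> Servable:
        return Servable(self.name, self.version, self.factory())


class Manager:
    """Owns servable lifecycles: load (birth), serve (life), unload (death)."""

    def __init__(self) -> None:
        self._serving: Dict[str, Servable] = {}
        self.events: List[str] = []

    def offer(self, loader: Loader) -> None:
        current = self._serving.get(loader.name)
        if current is not None and current.version >= loader.version:
            return  # a newer (or the same) version is already being served
        # Load the new version first, then drop the old one.
        self._serving[loader.name] = loader.load()
        self.events.append(f"loaded {loader.name}:{loader.version}")
        if current is not None:
            self.events.append(f"unloaded {current.name}:{current.version}")

    def predict(self, name: str, x: float) -> float:
        return self._serving[name].predict(x)


if __name__ == "__main__":
    manager = Manager()
    manager.offer(Loader("chopsticks", 1, lambda: (lambda x: x * 2)))
    manager.offer(Loader("chopsticks", 2, lambda: (lambda x: x * 3)))
    print(manager.predict("chopsticks", 10.0), manager.events)
```

Loading the new version before dropping the old one keeps the model available during an upgrade. This is similar in spirit to TF Serving's availability-preserving version policy.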

You can read a more in-depth overview of TF Serving architecture at the official documentation.

To get a TF Serving-based service up and running, you will need to:

- Export the model to a format compatible with TensorFlow Serving. In other words, create a Servable.

- Install or compile TensorFlow Serving

- Run TensorFlow Serving and load the latest version of the exported model (servable)

Setting up TensorFlow Serving can be done in several ways:

- Building from source. This requires you to install Bazel and complete a lengthy compilation process

- Using a pre-built binary package. TF Serving is available as a deb package.

To automate this process and simplify the subsequent deployment to Kubernetes, we created a simple Dockerfile for you. Please clone the article’s repository and follow the instructions in the README.md file to build the TensorFlow Serving Docker image:

    ➜ make build_image

This image has TensorFlow Serving and all dependencies preinstalled. By default, it loads models from the /models directory inside the Docker container.

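TF Serving treats a model's base path as a set of numbered versions. In this setup, that base path is presumably the /models directory mounted into the container. Each integer-named subdirectory holds one exported SavedModel, and under the default policy only the highest-numbered version is served. Estimator exports, for example, write each SavedModel into a timestamp-named subdirectory, which fits this scheme.

The stdlib sketch below picks the version that such a "latest version" policy would serve. The function name and the directory layout in the demo are made up for illustration.

```python
import os
import tempfile
from typing import Optional


def latest_version_dir(model_base_path: str) -> Optional[str]:
    """Return the highest integer-named subdirectory, or None if there is none."""
    best = None  # (version_number, directory_name)
    for entry in os.listdir(model_base_path):
        # Only all-ASCII-digit directory names count as versions; others are ignored.
        if entry.isascii() and entry.isdigit() and \
                os.path.isdir(os.path.join(model_base_path, entry)):
            candidate = (int(entry), entry)
            if best is None or candidate > best:
                best = candidate
    return None if best is None else os.path.join(model_base_path, best[1])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        for name in ("1", "2", "10", "tmp-export"):
            os.makedirs(os.path.join(base, name))
        print(os.path.basename(latest_version_dir(base)))  # 10
```

The comparison is numeric, so version 10 beats version 2, which a plain string sort would get wrong.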

Running a prediction service

To run our service inside the freshly built, ready-to-use TF Serving image, be sure to first train and export the model (or, if you’re using the companion repository, just run the make train_classifier command).

After the classifier is trained and exported, you can run the serving container by using the shortcut make run_server or by using the following command:

    ➜ docker run -p 8500:8500 -d --rm -v /path/to/exported/model:/models tfserve_bin

- `-p` maps ports from the container to the local machine
- `-d` runs the container in daemon (background) mode
- `--rm` removes the container after it has stopped
- `-v` maps a local directory to a directory inside the running container. This way, we pass our exported models to the TF Serving instance running inside the container

Calling model services from the client side

To call our services, we will use the grpc and tensorflow-serving-api Python packages. Please note that, at the time of writing, tensorflow-serving-api is only available for Python 2, so you should set up a separate virtual environment for the TF Serving client.

To use this API with Python 3, you’ll either need to use an unofficial package from here (downloading and unzipping it manually) or build TensorFlow Serving from source (see the documentation). Example clients for both the Estimator API and plain TensorFlow models are available in the companion repository.

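Whichever client you use, the prediction comes back as a TensorProto. The values arrive as a flat float_val list, and tensor_shape says how to fold them back up in row-major order.

Here is a dependency-free sketch of turning that into a predicted class per example. The helper names are hypothetical. The sample values are the classes_prob output that this post's client prints in the Inference section.

```python
from typing import List, Sequence


def to_rows(float_val: Sequence[float], dims: Sequence[int]) -> List[List[float]]:
    """Fold a flat, row-major float_val list of shape [batch, n_classes] into rows."""
    if len(dims) != 2:
        raise ValueError("this sketch only handles 2-D [batch, n_classes] tensors")
    batch, n_classes = dims
    if len(float_val) != batch * n_classes:
        raise ValueError("float_val length does not match tensor_shape")
    return [list(float_val[i * n_classes:(i + 1) * n_classes]) for i in range(batch)]


def predicted_classes(rows: List[List[float]]) -> List[int]:
    # Index of the highest probability in each row.
    return [max(range(len(row)), key=row.__getitem__) for row in rows]


if __name__ == "__main__":
    probs = to_rows([3.98174306027e-11, 1.0, 1.83699980923e-18], dims=[1, 3])
    print(predicted_classes(probs))  # [1]
```

If TensorFlow is installed on the client, tf.make_ndarray(response.outputs["classes_prob"]) performs the same conversion into a NumPy array (here, response stands for the PredictResponse your client received).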

Going into production with Kubernetes

If you have no Kubernetes cluster available, you may create one for local experiments using minikube, or you can easily deploy a real cluster using kubeadm.

We’ll go with the minikube option in this post. Once you have installed it (brew install minikube on macOS), we can start a local cluster and point our local Docker client at the cluster’s Docker daemon:

    ➜ minikube start
    ...
    ➜ eval $(minikube docker-env)

After that, we’ll be able to build our image and put it inside the cluster using

    ➜ make build_image

A more mature option would be to use an internal Docker registry and push the locally built image there, but we’ll leave this out of scope to keep the post concise.

After building our image and making it available to the Minikube instance, we need to deploy our model server. To leverage Kubernetes’ load balancing and high-availability features, we will create a Deployment that runs three replicas of our model server and keeps them monitored and running. You can read more about Kubernetes Deployments here.

Kubernetes objects can be described in YAML or JSON files, which are passed to the kubectl apply -f file_name command to (meh) apply our configuration to the cluster. Our chopstick server deployment config, chopstick_deployment.yml, is in the companion repository.

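For orientation, here is a minimal sketch of such a Deployment. It is an assumption about its shape, not the repository's file. It builds the manifest as a Python dict and prints it as JSON, which kubectl apply -f accepts just like YAML. The app label is hypothetical.

imagePullPolicy: IfNotPresent matters in this setup. The tfserve_bin image was built inside minikube's Docker daemon rather than pushed to a registry, and an image without a tag otherwise defaults to being pulled on every start.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str = "chopstick-classifier",
                        image: str = "tfserve_bin",
                        replicas: int = 3,
                        port: int = 8500) -> Dict[str, Any]:
    """Build an apps/v1 Deployment running `replicas` copies of the serving image."""
    labels = {"app": name}  # hypothetical label; Services select pods by it
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Use the image already in minikube's Docker daemon instead of pulling.
                        "imagePullPolicy": "IfNotPresent",
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest(), indent=2))
```

To use it, save the output with something like python make_deployment.py > chopstick_deployment.json (a hypothetical script name), then apply it with kubectl apply -f chopstick_deployment.json. Note that replicas: 3 keeps a fixed number of pods running and replaces failed ones. Scaling with load would need a HorizontalPodAutoscaler on top.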

Let’s apply this deployment using the kubectl apply -f chopstick_deployment.yml command. After a while, you’ll see all components running:

    ➜ kubectl get all
    NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deploy/chopstick-classifier   3         3         3            3           1d

    NAME                                 DESIRED   CURRENT   READY     AGE
    rs/chopstick-classifier-745cbdf8cd   3         3         3         1d

    NAME                          AGE
    deploy/chopstick-classifier   1d

    NAME                                 AGE
    rs/chopstick-classifier-745cbdf8cd   1d

    NAME                                       READY     STATUS    RESTARTS   AGE
    po/chopstick-classifier-745cbdf8cd-5gx2g   1/1       Running   0          1d
    po/chopstick-classifier-745cbdf8cd-dxq7g   1/1       Running   0          1d
    po/chopstick-classifier-745cbdf8cd-pktzr   1/1       Running   0          1d

Notice that, based on the Deployment config, Kubernetes created for us:

- Deployment

- Replica Set

- Three pods running our chopstick-classifier image

Now we want to call our shiny new service. To make this happen, we first need to expose it to the outside world. In Kubernetes, this is done by defining a Service. The Service definition for our model, chopstick_service.yml, is in the companion repository.

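Likewise, here is a minimal sketch of a matching Service, again an assumption rather than the repository's chopstick_service.yml. Type LoadBalancer with port 8500 matches the kubectl get svc output shown further down. The selector must match the pod labels used in the Deployment; the app: chopstick-classifier label here is hypothetical. As before, the dict is printed as JSON for kubectl apply -f.

```python
import json
from typing import Any, Dict


def service_manifest(name: str = "chopstick-classifier",
                     port: int = 8500,
                     target_port: int = 8500) -> Dict[str, Any]:
    """Build a v1 Service exposing the model server's gRPC port."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Hypothetical label; it must match the Deployment's pod template labels.
            "selector": {"app": name},
            # port: what clients hit on the Service; targetPort: the container's port.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(service_manifest(), indent=2))
```

On minikube, a LoadBalancer Service's external IP stays pending unless minikube tunnel is running. Kubernetes still allocates a node port for it, and that node port is how the service is reached in the rest of this post.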

As always, we can apply it using kubectl apply -f chopstick_service.yml. Kubernetes will assign a node port to our LoadBalancer Service, and we can see it by running

    ➜ kubectl get svc
    NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
    chopstick-classifier   LoadBalancer   10.104.213.253   <pending>     8500:32372/TCP   1d
    kubernetes             ClusterIP      10.96.0.1        <none>        443/TCP          1d

As you can see, in my case our chopstick-classifier is available via port 32372. It may be different on your machine, so don’t forget to check it. A convenient way to get the IP and port for any Service when using Minikube is to run the following command:

    ➜ minikube service chopstick-classifier --url
    http://192.168.99.100:32372

Inference

Finally, we are able to call our service!

    python tf_api/client.py 192.168.99.100:32372 1010.0
    Sending request
    outputs {
      key: "classes_prob"
      value {
        dtype: DT_FLOAT
        tensor_shape {
          dim {
            size: 1
          }
          dim {
            size: 3
          }
        }
        float_val: 3.98174306027e-11
        float_val: 1.0
        float_val: 1.83699980923e-18
      }
    }

Before going to real production

As this post is meant mainly for educational purposes and has some simplifications for the sake of clarity, there are several important points to consider before going to production:

- Use a service mesh such as linkerd.io. Accessing services through randomly assigned node ports is not recommended in production. As a bonus, linkerd will add much more value to your production infrastructure: monitoring, service discovery, high-speed load balancing, and more

- Use Python 3 everywhere, as there is really no reason to use Python 2 now

- Apply deep learning wisely. Even though it is a very general, spectacular, and widely applicable framework, deep learning is not the only tool at a data scientist’s disposal. It’s also not a silver bullet that solves every problem. Machine learning has much more to offer. If you have relational/tabular data, small datasets, or strict constraints on computational resources, training time, or model interpretability, consider using other algorithms and approaches.

- Reach out to us if you need any help in solving machine learning challenges: datalab@cinimex.ru

Source: https://www.freecodecamp.org/news/machine-learning-as-a-service-with-tensorflow-9929e9ce3801/